In the ever-evolving landscape of search engine optimization (SEO), the importance of well-structured XML sitemaps and Robots.txt files cannot be overstated. These foundational elements serve as essential guides, directing search engine crawlers to effectively navigate your website, index your content, and ultimately boost your visibility in search results. However, as the digital ecosystem becomes increasingly complex, conventional approaches to optimizing these tools may no longer suffice. Enter the transformative power of artificial intelligence (AI). This article delves into how innovative AI technologies are reshaping the way we enhance XML sitemaps and Robots.txt configurations, providing webmasters and digital marketers with strategies to optimize their online presence like never before. Join us as we explore practical applications, emerging trends, and the future of SEO in the age of AI.

Table of Contents

- Strategies for Optimizing XML Sitemaps with AI Technology

- Leveraging AI to Automate Robots.txt Creation and Management

- Enhancing Crawl Efficiency through Intelligent Sitemap Prioritization

- Monitoring and Adapting XML Sitemaps and Robots.txt with AI Insights

- In Retrospect

Strategies for Optimizing XML Sitemaps with AI Technology

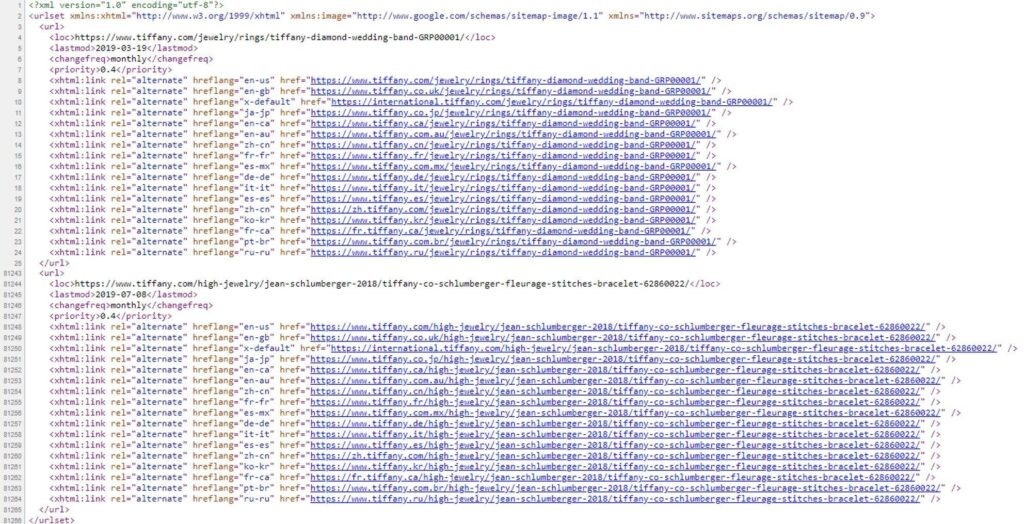

Incorporating AI technology into the optimization of XML sitemaps can improve how quickly and reliably search engines discover and index your pages. One effective strategy is to use machine learning to analyze user behavior and identify which pages generate the most engagement. Making sure these pages are listed in your XML sitemap, with accurate `<lastmod>` dates, tells search engines which content matters and when it last changed. Keep in mind that a sitemap is a hint, not a command: Google, for example, states that it ignores `<priority>` and `<changefreq>` values and uses `<lastmod>` only when it is consistently accurate. AI can also automate sitemap updates as your content changes, so the sitemap always reflects your current, most relevant pages.
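The automated-update idea can be sketched as follows, assuming you can export each URL's main content text from your CMS. An AI model might decide *which* pages belong in the sitemap. This plumbing only keeps `<lastmod>` honest by hashing the content and moving the date when the hash changes. The function names and the shape of the `previous` state are hypothetical.

```python
import hashlib

def content_hash(text):
    """Fingerprint a page's main content so edits can be detected."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def update_lastmod(pages, previous, today):
    """Return {url: (hash, lastmod)}, moving lastmod only for new or edited pages.

    pages    -- {url: current main-content text}, e.g. exported from a CMS (hypothetical)
    previous -- the {url: (hash, lastmod)} result of the last run
    today    -- ISO date string such as "2023-10-01"
    Pages missing from `pages` are dropped, so deleted URLs leave the sitemap.
    """
    updated = {}
    for url, text in pages.items():
        new_hash = content_hash(text)
        old = previous.get(url)
        if old is not None and old[0] == new_hash:
            updated[url] = old                # unchanged: keep the old lastmod
        else:
            updated[url] = (new_hash, today)  # new or edited: stamp today's date
    return updated


first = update_lastmod({"/a": "v1", "/b": "v1"}, {}, "2023-09-01")
second = update_lastmod({"/a": "v1", "/b": "v2"}, first, "2023-10-01")
print({url: lastmod for url, (_, lastmod) in second.items()})
# {'/a': '2023-09-01', '/b': '2023-10-01'}
```

Hashing only the main content, rather than the full HTML, avoids bumping every `lastmod` when a shared template element such as the footer changes.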

Another approach is to use AI-driven tools to audit and refine your existing sitemaps. These tools can identify duplicate URLs, broken links, and outdated content, and provide actionable recommendations for fixing them. Maintaining your sitemaps through a streamlined, repeatable process improves your chances of strong search performance. Additionally, consider using AI to suggest descriptive, keyword-rich URLs, which help search engines understand the context of your pages; the standard sitemap protocol itself has no field for keywords. This synergy between human insight and AI efficiency keeps your sitemaps both comprehensive and aligned with search intent.
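A minimal audit step might look like the sketch below, covering two of the checks mentioned: duplicate URLs and URLs that do not return HTTP 200. The normalization rules are illustrative. `fetch_status` is a hypothetical callable that would, in practice, wrap a HEAD request made with whatever HTTP client you use. It is injected so that the logic can be tested without network access.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit

def normalize(url):
    """Canonicalize a URL for duplicate detection (illustrative rules only)."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"  # treat /blog and /blog/ as the same page
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))

def audit_sitemap(urls, fetch_status):
    """Report duplicate URLs and URLs that do not return HTTP 200.

    fetch_status -- hypothetical callable: url -> HTTP status code
    """
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    duplicates = [group for group in groups.values() if len(group) > 1]

    broken = {}
    for url in urls:
        status = fetch_status(url)
        if status != 200:  # 3xx redirects are flagged too: list the final URL instead
            broken[url] = status
    return {"duplicates": duplicates, "broken": broken}


statuses = {"https://example.com/old-page": 404}
report = audit_sitemap(
    ["https://example.com/blog/", "https://example.com/blog", "https://example.com/old-page"],
    fetch_status=lambda url: statuses.get(url, 200),
)
print(report)
```

An AI layer could sit on top of this report, for example by ranking which broken URLs to redirect first. The deterministic checks themselves are cheap and worth running on every sitemap build.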

Leveraging AI to Automate Robots.txt Creation and Management

Creating and managing a robots.txt file by hand can be tedious, drawing resources away from work that matters more. By integrating AI-driven solutions, businesses can automate much of the generation and optimization of their robots.txt files. These systems analyze content priorities and crawling patterns to decide which areas search engine bots should and should not crawl. The automation process involves:

- Real-time Updates: AI tools can adapt the robots.txt as website content evolves, ensuring no essential areas are inadvertently blocked. (Crawlers cache the file, Googlebot typically for up to 24 hours, so changes do not take effect instantly.)

- Data Analytics: AI can analyze user behavior and search engine data, suggesting modifications to improve visibility and crawl efficiency.

- Compliance Checks: Regular reviews confirm that your robots.txt follows the Robots Exclusion Protocol (RFC 9309) and search engine guidelines, reducing the risk of rules being misread or silently ignored.
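The "no essential areas are inadvertently blocked" check can be sketched with Python's standard `urllib.robotparser`. In the sketch below, a robots.txt is rendered from a rule set and then tested against a list of URLs that must stay crawlable. The rule-set format and the function names are hypothetical. One caveat: `urllib.robotparser` applies the first matching rule in a group, while Google applies the most specific (longest) match, so the two can disagree when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

def build_robots_txt(groups, sitemap_url=None):
    """Render robots.txt text from {user_agent: [(directive, path), ...]}."""
    lines = []
    for agent, rules in groups.items():
        lines.append(f"User-agent: {agent}")
        lines.extend(f"{directive}: {path}" for directive, path in rules)
        lines.append("")  # a blank line ends each group
    if sitemap_url:
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"

def blocked_essentials(robots_txt, essential_urls, user_agent="Googlebot"):
    """Return the essential URLs that this robots.txt would block for user_agent.

    Note: urllib.robotparser uses first-match semantics, not Google's
    longest-match, so overlapping Allow/Disallow rules may be judged differently.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in essential_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules (e.g. proposed by an AI assistant), checked before publishing.
proposed = build_robots_txt(
    {"*": [("Disallow", "/admin/"), ("Disallow", "/cart")]},
    sitemap_url="https://example.com/sitemap.xml",
)
problems = blocked_essentials(proposed, ["https://example.com/products",
                                         "https://example.com/cart/checkout-guide"])
print(problems)  # ['https://example.com/cart/checkout-guide']
```

The example shows a classic manual mistake: `Disallow: /cart` is a prefix match, so it also blocks `/cart/checkout-guide`. An automated gate like this catches such mistakes before the file goes live.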

Additionally, using AI to manage robots.txt can significantly reduce human error, a common pitfall in manual edits. Predictive tools can assess the likely impact of a change, such as which URLs it would newly block, before it goes live. The benefits of incorporating AI for this purpose include:

- Improved Accuracy: Minimized risk of misconfigurations that could harm site traffic.

- Scalability: Efficiently manage multiple robots.txt files across various subdomains or international sites.

- Enhanced Performance Tracking: Gain insights into how changes to robots.txt affect site indexing and search performance.
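The "assess the impact before changes go live" idea can be approximated without any machine learning. The sketch below compares the current and proposed robots.txt over a sample of known URLs and reports what would become blocked or unblocked. It runs per host, because robots.txt rules apply only to the host, protocol, and port where the file is served, so each subdomain or international site needs its own check. The `sites` input shape is a hypothetical choice. A predictive model could later weight the results by traffic.

```python
from urllib.robotparser import RobotFileParser

def _parse(robots_txt):
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def crawl_impact(current_txt, proposed_txt, urls, user_agent="Googlebot"):
    """Return (newly_blocked, newly_allowed) URLs for a proposed robots.txt change.

    urllib.robotparser uses first-match semantics rather than Google's
    longest-match, so treat the result as a review aid, not a guarantee.
    """
    current, proposed = _parse(current_txt), _parse(proposed_txt)
    newly_blocked, newly_allowed = [], []
    for url in urls:
        before = current.can_fetch(user_agent, url)
        after = proposed.can_fetch(user_agent, url)
        if before and not after:
            newly_blocked.append(url)
        elif after and not before:
            newly_allowed.append(url)
    return newly_blocked, newly_allowed

def impact_by_host(sites, user_agent="Googlebot"):
    """Check every host against its own file.

    sites -- {host: (current_txt, proposed_txt, sample_urls)}  (hypothetical shape)
    """
    return {host: crawl_impact(cur, new, urls, user_agent)
            for host, (cur, new, urls) in sites.items()}


report = impact_by_host({
    "www.example.com": ("User-agent: *\nDisallow: /tmp/\n",
                        "User-agent: *\nDisallow: /blog/\n",
                        ["https://www.example.com/blog/post",
                         "https://www.example.com/tmp/draft"]),
})
print(report)
# {'www.example.com': (['https://www.example.com/blog/post'], ['https://www.example.com/tmp/draft'])}
```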

Enhancing Crawl Efficiency through Intelligent Sitemap Prioritization

Incorporating AI-driven strategies into your XML sitemaps enables smarter prioritization, helping the most critical pages of your website get the attention they deserve from search engines. By analyzing user behavior and engagement metrics, machine learning algorithms can assess which pages hold the most value based on factors such as potential conversion rates and user retention. Surfacing that high-priority content through sitemap inclusion and accurate update dates helps crawlers spend their time where it matters, improving the overall efficiency of the crawling process. Consider applying dynamic prioritization techniques that adapt in real time, aligning sitemap updates with changes in site performance and relevance.

To implement these innovations effectively, you can develop a comprehensive priority scoring system that ranks URLs based on several criteria. This system may include factors such as:

- Page Views: Assessing the number of visits to determine current popularity.

- Conversion Potential: Evaluating which pages have led to conversions, based on historical data.

- Content Freshness: Prioritizing recently updated content so that search engines crawl the latest information.
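A priority score combining these three factors could be sketched as a weighted sum. Page views and conversions are each normalized against the site maximum, and freshness decays exponentially with age. The weights, the 90-day half-life, the 0 to 100 scale, and the field names are all assumptions for illustration. In an AI-driven setup, the weights could instead be fitted to your own conversion data.

```python
from datetime import date

# Hypothetical weights; tune them, or fit them to your own conversion data.
WEIGHTS = {"views": 0.4, "conversions": 0.4, "freshness": 0.2}

def freshness(last_updated, today, half_life_days=90):
    """1.0 for content updated today, halving every half_life_days."""
    age_days = max((today - last_updated).days, 0)
    return 0.5 ** (age_days / half_life_days)

def priority_scores(pages, today):
    """Score each page 0-100 from page views, conversions and freshness.

    pages -- dicts with 'url', 'views', 'conversions' and 'last_updated'
             (a datetime.date); the field names are illustrative.
    Returns {url: score}, highest score first.
    """
    max_views = max((p["views"] for p in pages), default=0) or 1
    max_conversions = max((p["conversions"] for p in pages), default=0) or 1
    scores = {}
    for p in pages:
        score = (WEIGHTS["views"] * p["views"] / max_views
                 + WEIGHTS["conversions"] * p["conversions"] / max_conversions
                 + WEIGHTS["freshness"] * freshness(p["last_updated"], today))
        scores[p["url"]] = round(100 * score)
    return dict(sorted(scores.items(), key=lambda item: item[1], reverse=True))


pages = [
    {"url": "/product-a", "views": 1000, "conversions": 50, "last_updated": date(2023, 10, 1)},
    {"url": "/careers", "views": 500, "conversions": 0, "last_updated": date(2023, 10, 1)},
]
print(priority_scores(pages, today=date(2023, 10, 1)))  # {'/product-a': 100, '/careers': 40}
```

Normalizing against the site maximum keeps scores comparable as traffic grows. The trade-off is that a single viral page compresses everyone else's view component.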

This structured approach not only improves crawl efficiency but also increases the visibility of essential content, maximizing organic traffic potential. Below is a simple table illustrating a sample priority scoring layout:

| URL | Priority Score | Last Updated |
|---|---|---|
| /product-a | 85 | 2023-10-01 |
| /blog-post-xyz | 72 | 2023-09-15 |
| /about-us | 62 | 2023-08-20 |
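To turn a scoring table like this into an actual sitemap, the sketch below renders the rows with Python's standard `xml.etree.ElementTree`, ordering them by score. It also maps the scores onto the sitemap protocol's 0.0 to 1.0 `<priority>` range. The 0 to 100 scale is an assumption, because the table does not state its range. `<priority>` is only a hint to crawlers, so the accurate `<lastmod>` dates carry most of the value.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(rows, base_url):
    """Render (path, score, last_updated) rows as sitemap XML, highest score first.

    Scores on an assumed 0-100 scale are clamped and mapped to 0.0-1.0 <priority>.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for path, score, last_updated in sorted(rows, key=lambda row: row[1], reverse=True):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = base_url.rstrip("/") + path
        ET.SubElement(url, "lastmod").text = last_updated  # W3C date, e.g. 2023-10-01
        priority = min(max(score, 0), 100) / 100
        ET.SubElement(url, "priority").text = f"{priority:.2f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


rows = [
    ("/about-us", 62, "2023-08-20"),
    ("/product-a", 85, "2023-10-01"),
    ("/blog-post-xyz", 72, "2023-09-15"),
]
print(build_sitemap(rows, "https://example.com"))
```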

Monitoring and Adapting XML Sitemaps and Robots.txt with AI Insights

AI insights can transform how businesses monitor and adapt their XML sitemaps and robots.txt files. By analyzing traffic patterns and search engine crawl activity, AI can identify which pages are most valuable and where search engines might be struggling to access content. The result is leaner sitemaps that prioritize important URLs while keeping less relevant pages from wasting crawl budget. Key benefits of this approach include:

- Enhanced visibility: AI tools can pinpoint effective sitemap structures, helping key pages get discovered and recrawled promptly.

- Real-time updates: Automated systems can adjust sitemaps dynamically as website content evolves, ensuring the most current pages are always included.

- Data-driven decisions: AI-powered analytics lend insight into user engagement, informing adjustments to both sitemaps and robots.txt configurations.
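Finding "where search engines might be struggling" usually starts with server logs. The sketch below parses combined-format access log lines and counts Googlebot requests and 4xx/5xx responses per path. It also lists sitemap paths that the bot never requested. The log format and the user-agent substring match are assumptions. User-agent strings can be spoofed, so genuine crawler traffic should be verified (for example, via reverse DNS) before you act on the results.

```python
import re
from collections import Counter

# Request, status and user-agent fields of a combined-format access log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def crawl_coverage(log_lines, sitemap_paths, bot="Googlebot"):
    """Count bot hits and error responses per path; list sitemap paths never crawled."""
    hits, errors = Counter(), Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match or bot not in match.group("agent"):
            continue
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        hits[path] += 1
        if match.group("status")[0] in "45":  # 4xx/5xx: the bot hit a problem
            errors[path] += 1
    never_crawled = [path for path in sitemap_paths if hits[path] == 0]
    return hits, errors, never_crawled


BOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
logs = [
    f'192.0.2.1 - - [01/Oct/2023:10:00:00 +0000] "GET /products HTTP/1.1" 200 5120 "-" "{BOT_UA}"',
    f'192.0.2.1 - - [01/Oct/2023:10:05:00 +0000] "GET /blog HTTP/1.1" 404 0 "-" "{BOT_UA}"',
    '203.0.113.5 - - [01/Oct/2023:10:06:00 +0000] "GET /about-us HTTP/1.1" 200 100 "-" "Mozilla/5.0"',
]
hits, errors, never_crawled = crawl_coverage(logs, ["/products", "/blog", "/about-us"])
print(dict(hits), dict(errors), never_crawled)
```

Paths that appear in the sitemap but never in crawler logs, or that keep returning errors to the bot, are natural candidates for the sitemap and robots.txt adjustments described here.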

Moreover, combining machine learning with traditional data analysis tools makes robots.txt files easier to manage. Understanding user behavior and search engine responses helps you fine-tune the directives you give crawlers. For instance, regular evaluations may uncover needless restrictions or underused paths. A sample review table could look like this:

| Page URL | Current Status | Recommended Action |
|---|---|---|
| /products | Crawl Allowed | Enhance Sitemap Priority |
| /old-page | Crawl Disallowed | Remove from robots.txt |
| /blog | Crawl Allowed | Add to Sitemap |
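A review table like this could be generated automatically. The sketch below checks each path against robots.txt and the current sitemap and combines the result with a priority score to suggest an action. The decision rules, the score threshold of 60, and the `scores` input (which might come from an AI scoring model) are hypothetical illustrations, not a standard method. Here, "Remove from robots.txt" means dropping the Disallow rule that blocks a high-value page.

```python
from urllib.robotparser import RobotFileParser

def review(paths, robots_txt, sitemap_paths, scores, threshold=60,
           base_url="https://example.com", user_agent="Googlebot"):
    """Return (path, current status, recommended action) rows.

    scores    -- {path: 0-100 priority score}, e.g. from a scoring model (hypothetical)
    threshold -- score at which a page counts as high value (an arbitrary choice)
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # first-match semantics, unlike Google's longest-match
    rows = []
    for path in paths:
        allowed = parser.can_fetch(user_agent, base_url + path)
        high_value = scores.get(path, 0) >= threshold
        if not allowed:
            action = "Remove from robots.txt" if high_value else "No change"
        elif path not in sitemap_paths:
            action = "Add to Sitemap"
        elif high_value:
            action = "Enhance Sitemap Priority"
        else:
            action = "No change"
        status = "Crawl Allowed" if allowed else "Crawl Disallowed"
        rows.append((path, status, action))
    return rows


for row in review(["/products", "/old-page", "/blog"],
                  robots_txt="User-agent: *\nDisallow: /old-page\n",
                  sitemap_paths={"/products"},
                  scores={"/products": 85, "/old-page": 70, "/blog": 40}):
    print(row)
```

With these made-up inputs, the output reproduces the three sample rows. In practice, a person should still approve any change to robots.txt.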

In Retrospect

As we venture deeper into the digital age, integrating AI into our SEO strategies is becoming not just a competitive edge but a necessity. By enhancing XML sitemaps and optimizing robots.txt files with AI solutions, we can help ensure that our websites are crawled more effectively, indexed more efficiently, and better positioned in search results.

Embracing these technologies not only streamlines our workflow but also gives search engines clearer pathways to the content our audience is looking for. As algorithms evolve and the online landscape continues to shift, staying abreast of these advancements will empower you to adapt and thrive in a realm that rewards agility and foresight.

So, whether you’re a seasoned digital marketer or a newcomer to the field, harnessing the power of AI in your sitemap and robots.txt strategies can help you unlock new levels of visibility and success. Keep exploring, experimenting, and embracing these innovations; your website’s performance and your audience will thank you!

Thank you for reading, and don’t forget to subscribe for more insights into elevating your digital presence.